
WEARHAP in the Italian press: “La ricerca fa show”

[ITA] September 26, 2013 – Il Corriere fiorentino – “La ricerca fa show”

HandCorpus

HandCorpus is a new repository, created by Matteo Bianchi from UNIPI and Minas Liarokapis from the National Technical University of Athens, where anyone can freely share and search for different kinds of experimental data about human and robotic hands.

The repository is available at: http://www.handcorpus.org.

The goal is not only to allow researchers to replicate results for benchmarking, but also to reuse data from previous experiments. The HandCorpus provides an accurate and coherent record for citing data sets, giving due credit to authors. Data sets are hierarchically indexed and can be easily retrieved using keywords and advanced search operations. A blog, a newsletter, a publication repository and applications for mobile platforms and social networks are also provided.

The authors are currently working on a new version of the repository. You are welcome to join the community and share your data; the authors will take care of it.

TEDxStanford 2013 – Allison Okamura

Fitbit Flex vs. Jawbone Up Reviews: The Battle of the Fitness Bracelets – ABC News

Lernstift is the vibrating pen that critiques your spelling video

Researchers say new development could give artificial skin a wider range of senses

WEARHAP: “Wearable Haptics for Humans and Robots”

The UNISI team is happy to announce that the FP7-ICT-601165 Collaborative Project “WEARable HAPtics for Humans and Robots” (WEARHAP) has just started!

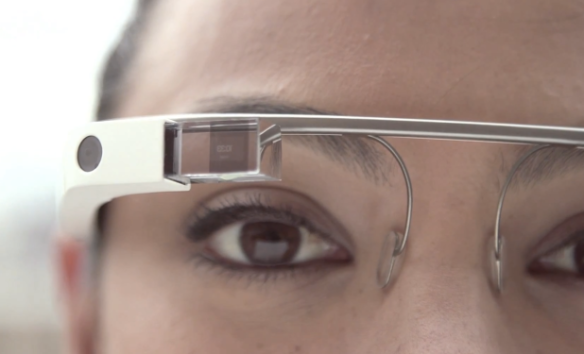

The project aims at laying the scientific and technological foundations for wearable haptics, a novel concept that will redefine the way humans cooperate with robots. These technologies will bring to our skin the revolution that the Sony Walkman brought to our ears, and that Google Glass plans to bring to our eyes.

The glasses designed by Google (Fig. 1) are extremely wearable and will be used to improve interaction with virtual environments and digital content in augmented reality scenarios.

Video calls, maps, and other kinds of visual information, which became portable with the advent of smartphones and tablets, will become wearable in the future imagined by Google. The same happened to audio in the second half of the twentieth century, with the headphones and earphones introduced by Sony with the Walkman.

WEARHAP will bring this revolution to our skin.

Figure 1: WEARHAP will bring to our skin the revolution that headphones brought to our ears (left), and that Google Glass plans to bring to our eyes (center).

Although haptic interfaces are now widely used in laboratories and research centers, they remain underexploited in everyday life. The main reason is that they have traditionally been physically grounded, and only recently have they been designed to be portable. Project WEARHAP will change the way haptics is used and interpreted for interaction: from grounded and portable paradigms to more complex, integrated and wearable systems.

Figure 2: Grounded haptics (left), exoskeletons (center) and wearable haptics (right). In wearable haptics the exoskeleton is removed and the wearability is improved at the cost of losing most of the kinesthetic component of the interaction.

Imagine a world in which wearable haptic systems allow you to perceive, share, interact and cooperate with someone or something that is miles away from you. Think of the variety of new opportunities wearability will bring to our fingertips in cooperation, rescue robotics, health, social interaction, remote assistance, and in many more intriguing scenarios that we can only begin to envisage.

In order to highlight the benefits which characterize the WEARHAP technology, selected scenarios from the application fields of robotics, social media and health sciences will guide the WEARHAP investigations.

In particular, these wearable technologies will be employed for:

– Haptic communication with cognitively impaired subjects: project WEARHAP will provide effective scientific tools for using haptic stimulation to actively stimulate patients with severe brain damage, in order to communicate with them and hopefully improve their level of consciousness.

– Tangible videogames: WEARHAP systems will enrich videogames with the possibility of directly touching the content with our hands and body, without any workspace restrictions, resulting in more immersive gameplay.

Figure 3: The main representative scenarios. In robotics: human-robot interaction and cooperation. In health and social services: communication for social and health care and gaming for social and augmented interaction.

The Consortium is composed of:

University of Siena (coordinator), led by Prof. Prattichizzo;

University of Pisa, led by Prof. Bicchi and Prof. Scilingo;

University of Bielefeld, led by Prof. Ernst and Prof. Ritter;

Technical University Munich, led by Prof. Hirche;

Sant’Anna School of Advanced Studies, led by Prof. Bergamasco and Prof. Frisoli;

Foundation for Research and Technology Hellas, led by Prof. Argyros;

Universidad Rey Juan Carlos, led by Prof. Otaduy;

Italian Institute of Technology, led by Prof. Caldwell and Dr. Tsagarakis;

Université Pierre et Marie Curie – Paris, led by Prof. Hayward;

Umeå University, led by Prof. Edin.

The WEARHAP project will last for 4 years and will receive €7,700,000 in funding from the European Union, out of a total cost of €10,038,580. The kick-off meeting is scheduled for 29-30 April in Siena, Italy.